Author: Bakhmat M.

Key Takeaways for Business Leaders

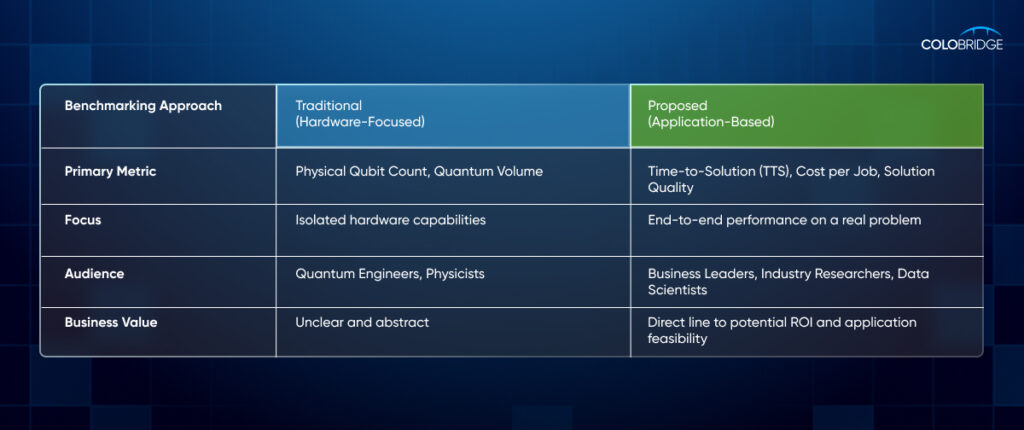

- Traditional metrics are not enough: Simply counting qubits is like judging a classical computer by its number of transistors alone. It doesn’t predict real-world performance.

- Focus on application, not just hardware: The most meaningful benchmarks test the entire system—hardware, software, and cloud services—on problems relevant to your industry.

- Key metrics to watch: Beyond raw hardware speed, look at Time-to-Solution (TTS), validity of the result, user-friendliness of the platform, and total cost.

- The industry is moving towards standards: The goal is to create independent, application-based benchmarks that allow for fair comparison across different quantum providers and guide enterprise adoption.

Why is Benchmarking Quantum Computers So Difficult?

Evaluating the performance of a quantum computer is essential for tracking progress and determining its potential for solving real-world problems. However, unlike classical computers, quantum systems present unique and complex challenges for measurement.

- Limited Insight from Hardware Specs: Traditional benchmarks that focus on hardware limits (like physical qubit counts) fail to provide a clear picture of how a quantum computer will perform on a practical application. Such figures also struggle to convey clear business value to industry leaders without a quantum background.

- The System is More Than Just Qubits: True performance depends on the entire quantum stack. The intricate interplay between the quantum hardware, the control software, and the classical cloud services that manage the workflow must be assessed as a whole.

- Connecting to Real-World Value: Benchmarks must be linked to application performance and presented in a way that is easily understood in a real-life business context, not just in a lab.

What is the Modern Approach to Quantum Benchmarking?

To address these challenges, industry experts, including teams at Google Quantum AI, are pioneering the move toward application-based benchmarks. These are designed to be independent of any specific quantum provider and built to measure performance on tasks relevant to business.

This new approach is founded on four key pillars:

- Standardized Metrics: Using metrics like Time-to-Solution (TTS) that consider the hidden computational costs of running a hybrid quantum-classical job.

- Real-World Datasets: Basing tests on use-cases from various industries, such as finance, logistics, and drug discovery.

- Reproducible Methods: Ensuring that benchmarking processes are transparent and can be reliably repeated across different platforms.

- Digestible Results: Presenting outcomes in a format that is clear and actionable for business leaders with little to no quantum background.
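To make the "hidden computational costs" behind Time-to-Solution concrete, here is a minimal sketch that adds up every stage of a hybrid quantum-classical job rather than the QPU time alone. The stage names and all timings are illustrative assumptions, not measurements from any provider or part of any published benchmark.

```python
from dataclasses import dataclass


@dataclass
class HybridJobTiming:
    """Wall-clock seconds per stage of one hybrid job (hypothetical breakdown)."""
    queue_wait: float         # waiting for a QPU slot
    compilation: float        # circuit compilation and scheduling
    qpu_execution: float      # time the quantum processor is actually running
    classical_compute: float  # optimizer steps and post-processing
    network_overhead: float   # round trips between cloud services and the QPU

    def time_to_solution(self) -> float:
        """End-to-end TTS: the sum of every stage, not just QPU time."""
        return (self.queue_wait + self.compilation + self.qpu_execution
                + self.classical_compute + self.network_overhead)

    def hidden_fraction(self) -> float:
        """Share of TTS spent outside the quantum processor."""
        tts = self.time_to_solution()
        return (tts - self.qpu_execution) / tts


# Made-up numbers, chosen only to illustrate the calculation.
job = HybridJobTiming(queue_wait=120.0, compilation=8.0, qpu_execution=12.0,
                      classical_compute=50.0, network_overhead=10.0)
print(f"TTS: {job.time_to_solution():.0f} s")
print(f"Spent outside the QPU: {job.hidden_fraction():.0%}")
```

In this made-up example the quantum processor accounts for only a small part of the total, which is why a benchmark that reports QPU time alone can be misleading.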

What is the Current Status and Future Outlook?

Today, hardware benchmarking still highlights the immense engineering challenges in building full-scale, fault-tolerant quantum computers. While companies, including Google, report physical qubit counts, achieving a large number of reliable logical qubits—which are corrected for errors—remains the critical next step for the industry.

Major players are setting ambitious public goals. For example, IBM’s “100×100 Challenge” aims to demonstrate by 2024 the ability to calculate unbiased observables from circuits with 100 qubits and a depth of 100 gate operations. A computation of this size would be far beyond what today’s most powerful supercomputers can simulate by brute force.
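A rough way to see why a 100-qubit circuit is hard to check classically is to count the memory a brute-force statevector simulation would need: one complex amplitude for each of the 2^n basis states. The sketch below assumes 16 bytes per amplitude (double-precision complex) and covers only this naive method. Other classical techniques, such as tensor-network simulation, can handle some circuits with far less memory, so this is an illustration and not a proof of quantum advantage.

```python
def statevector_bytes(num_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory needed to store all 2**n complex amplitudes of an n-qubit state."""
    return (2 ** num_qubits) * bytes_per_amplitude


for n in (30, 50, 100):
    print(f"{n} qubits: {statevector_bytes(n):.3e} bytes")
```

At 30 qubits the state fits in 16 GiB of memory. Every additional qubit doubles the requirement, so the figure for 100 qubits is larger by a factor of 2^70.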

At Google Quantum AI, our focus is on demonstrating a clear computational advantage on scientifically or commercially relevant tasks. Advancements in processor stability and error correction, as seen in our latest hardware, are paving the way for benchmarks that move beyond academic exercises and measure performance on practical problems.

Effective benchmarking that prioritizes practical application performance is the key to unlocking the next phase of quantum adoption. It will guide businesses, focus research, and mature the entire quantum industry.

Frequently Asked Questions (FAQ)

1. What is the single most important metric when comparing quantum computers?

There is no single metric, so a holistic view is crucial. However, Time-to-Solution (TTS) combined with Solution Quality is a powerful indicator for business applications: together they measure how long it takes, and how much it costs, to get a correct and useful answer.
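One common way to combine speed and solution quality is to ask how many repeated runs are needed before at least one of them returns an acceptable answer with a chosen confidence, and then to multiply by the time and cost per run. The sketch below uses the repetition formula R = ceil(ln(1 - confidence) / ln(1 - p)) and assumes independent runs. The two platforms, their timings, success probabilities, and prices are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Platform:
    """Per-run figures for one provider (all values hypothetical)."""
    name: str
    seconds_per_run: float
    success_probability: float  # chance a single run meets the quality bar
    cost_per_run: float         # in dollars


def runs_needed(p_success: float, confidence: float = 0.99) -> int:
    """Independent runs required to see at least one success with the given confidence."""
    if not 0.0 < p_success <= 1.0:
        raise ValueError("p_success must be in (0, 1]")
    if p_success == 1.0:
        return 1
    return max(1, math.ceil(math.log(1.0 - confidence) / math.log(1.0 - p_success)))


def tts_and_cost(platform: Platform, confidence: float = 0.99) -> tuple[float, float]:
    """Total seconds and total cost to reach the confidence target."""
    runs = runs_needed(platform.success_probability, confidence)
    return runs * platform.seconds_per_run, runs * platform.cost_per_run


fast_but_noisy = Platform("A", seconds_per_run=2.0, success_probability=0.2, cost_per_run=0.10)
slow_but_reliable = Platform("B", seconds_per_run=5.0, success_probability=0.5, cost_per_run=0.30)

for platform in (fast_but_noisy, slow_but_reliable):
    seconds, cost = tts_and_cost(platform)
    print(f"Platform {platform.name}: TTS {seconds:.0f} s, cost ${cost:.2f}")
```

With these made-up figures, the platform with the slower individual runs reaches the target sooner because it needs fewer repetitions, which is the kind of trade-off a per-run speed figure hides.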

2. How far away are we from having a standardized “quantum benchmark” like SPEC for classical CPUs?

The industry is actively working on it through consortiums and partnerships. While a universal standard is likely still a few years away, application-specific benchmarks for fields like chemistry simulation and financial modeling are emerging now.

3. What is the difference between a physical qubit and a logical qubit?

A physical qubit is the fundamental quantum component, which is inherently noisy and prone to errors. A logical qubit is a more robust, error-corrected unit composed of many physical qubits working together. The ability to create and scale logical qubits is the central challenge in building a fault-tolerant quantum computer.
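The intuition that many noisy components can form one more reliable unit can be shown with a classical toy model: a repetition code decoded by majority vote. This is only an analogy. Real quantum error correction relies on codes such as the surface code, cannot simply copy quantum states, and must also handle phase errors. The function name and the error rates used here are illustrative assumptions.

```python
from math import comb


def logical_error_rate(num_physical: int, p_physical: float) -> float:
    """Probability that a majority of num_physical independent copies are wrong.

    Models a classical repetition code with majority-vote decoding;
    num_physical must be odd so the vote cannot tie.
    """
    if num_physical % 2 == 0:
        raise ValueError("num_physical must be odd")
    majority = num_physical // 2 + 1
    return sum(
        comb(num_physical, k) * p_physical**k * (1 - p_physical) ** (num_physical - k)
        for k in range(majority, num_physical + 1)
    )


# Illustrative physical error rate of 10% per component.
for n in (1, 3, 5, 11):
    print(f"{n:>2} physical units -> logical error rate {logical_error_rate(n, 0.1):.6f}")
```

In this toy model the logical error rate falls as more physical units are added, but only when the physical error rate is below 50%. Quantum codes have a similar, much stricter, threshold, and that is why physical error rates matter alongside qubit counts.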

4. Can I run these benchmarks on my own?

Yes. Most major cloud providers with quantum offerings, including Google, provide access and documentation for running performance benchmarks. This transparency is crucial for building trust and helping users make informed decisions.